Did you know that you can expose your Kettle transformation quite easily as a web service? I will quickly walk you through the setup based on a very simple example:

Imagine we are a UK store selling second hand lenses. We store our inventory in a database, prices are in GBP, we want to convert them to USD as well as we have some customers in the US. Furthermore, we want to give the calling app the possibility to retrieve a list from our inventory based on the lens mount.

This is how our transformation looks like (for simplicity and so that you can easily run the transformation on your side I used a fixed sample dataset and a filter to simulate a db input):

We set up our transformation to accept a parameter called VAR_LENS_MOUNT. We retrieve the value of this parameter using the Get Variables set and join it to the main flow. Then we use the Filter rows step to keep only records that match the requested lens mount value. As said, normally we would have a Table input step instead and write a query therefore (but then you cannot run my sample as easily).

The next task is to get the current conversion rate GBP to USD. We use the HTTP Web Service step therefore with following URL:

http://finance.yahoo.com/d/quotes.csv?e=.csv&f=c4l1&s=GBPUSD=X

Thanks to Yahoo we can retrieve this conversion rate for free. We do some post processing to extract and clean the values and finally join them to the main stream. Next we use the Calculator step to calculate the amount of USD based on the conversion rate we just retrieved.

The final step is to set up the Json output set:

Set the Json output step up like this:

- Make sure that Nr of rows in a block is left empty so that all rows get output inside one block only.

- Tick Pass output to servlet.

Click on the Fields tab and then on Get Fields to auto-populate the grid:

Save your transformation.

Next let’s start Carte. Navigate inside your PDI directory and run:

sh carte.sh localhost 8181

This will start Carte on your local machine on port 8181.

To make sure that the server is running properly, go to your favorite web browser:

http://localhost:8181/

Provide the following default username and password: cluster, cluster

Let’s call the web service from your favorite web browser:

You don’t have to copy the ktr to the server, just leave it where it is.

Just run this URL:

http://127.0.0.1:8181/kettle/executeTrans/?trans=%2Fhome%2Fdsteiner%2FDropbox%2Fpentaho%2FExamples%2FPDI%2Fexpose-ktr-as-web-service%2Fsample2.ktr&VAR_LENS_MOUNT=m42

You can see that we specify the full path to our transformation. Make sure you replace forward slashes with: %2F

If you have blanks in your filename, you can just leave them there.

Any parameters are just passed along: Use exactly the same name in the transformation. In this case we only wanted to retrieve a list of m42 mount fit lenses.

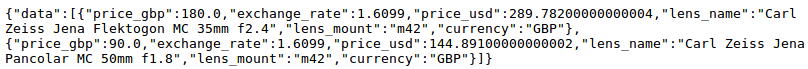

Now you can see the json output in your browser:

Other steps that support the Pass output to servlet option are the Text file output and the XML output. In this example we just accessed our transformation directly on the file system from Carte. Alternatively you can configure Carte as well to access the transformation from a repository.

You can download the sample file from here.

For more info have a look at Matt's blog post on the same subject and the Pentaho Wiki (here).

0 comments:

Post a Comment